Vasileios Mezaris

Electrical and Computer Engineer, Ph.D.

DATASETS

S-VideoXum: a dataset for Script-driven Video Summarization. We introduce S-VideoXum, a dataset derived from the existing VideoXum video summarization dataset. To make VideoXum suitable for training and evaluating script-driven video summarization methods, we extended it with natural-language descriptions of the 10 ground-truth summaries available for each video. These descriptions serve as the scripts that drive the summarization process. S-VideoXum comprises 119,080 scripts corresponding to 11,908 videos (split into 6,782 videos for training, 3,419 for validation and 1,707 for testing).
- Related publications [acmmm2025]
- Dataset download from [github]

TSV360 dataset for text-driven saliency detection in 360-degree videos. TSV360 is a dataset for training and objectively evaluating text-driven 360-degree video saliency detection methods. It contains textual descriptions and the associated ground-truth saliency maps for 160 videos (up to 60 seconds long) sourced from the VR-EyeTracking and Sports-360 benchmarking datasets. These source datasets cover a wide and diverse range of 360-degree visual content, including indoor and outdoor scenes, sports events, and short films.
- Related publications [cbmi2025a]
- Dataset download from [github]

360-VSumm dataset. We introduce a new dataset for 360-degree video summarization, i.e., the transformation of 360-degree video content into concise 2D-video summaries that can be consumed via traditional devices, such as TV sets and smartphones. The dataset includes human-generated ground-truth summaries. We also present an interactive tool that was developed to facilitate the data annotation process and can assist other annotation activities that rely on video fragment selection.
- Related publications [imx24]
- Dataset download from [github]

VADD dataset. We introduce the Visual-Audio Discrepancy Detection (VADD) dataset and experimental protocol, curated to facilitate research on detecting discrepancies between the visual and audio streams of videos. The dataset includes a subset of videos in which the visual content portrays one class (e.g., an outdoor scene), while the accompanying audio track is sourced from a different class (e.g., the sound of an indoor environment).
- Related publications [icmr24a]
- Dataset download from [github]

RetargetVid: a video retargeting dataset. We provide a benchmark dataset for video cropping: annotations (i.e., ground-truth croppings in the form of bounding boxes) for two target aspect ratios (1:3 and 3:1). These annotations were generated by 6 human subjects for each of 200 videos. The videos themselves belong to the publicly available DHF1K dataset.
- Related publications [icip21]
- Dataset download from [github]

Lecture video fragmentation dataset and ground truth. We provide a large-scale dataset of artificially-generated lectures, together with the corresponding ground-truth fragmentation, for evaluating lecture video fragmentation techniques. Overall, the dataset contains 300 artificially-generated lectures of about 120 minutes each, totaling about 600 hours of content.
- Related publication [mmm19]
- Dataset download from [zenodo] or [github]

Annotated dataset for sub-shot segmentation evaluation. We provide a video dataset with ground-truth sub-shot segmentation for 33 single-shot videos, for evaluating algorithms that perform temporal segmentation of video into sub-shots. The ground-truth segmentation was created by human annotators. Overall, the dataset contains 674 sub-shot transitions.
- Related publication [mmm18]
- Dataset download from [zenodo] or [mklab site]

Concept detection scores for IACC.3. We provide a complete set of concept detection scores, generated with our state-of-the-art concept detectors, for the videos of the IACC.3 dataset used in the TRECVID AVS task from 2016 onwards (600 hours of Internet Archive videos). Scores for 1345 concepts (1000 ImageNet concepts and 345 TRECVID SIN concepts) have been generated using two different methods.
- Related publications [mm16] [mmm17]
- Dataset [download dataset]

Concept detection scores for MED16train. We provide concept detection scores for the MED16train dataset, which is used in the TRECVID Multimedia Event Detection (MED) task. Scores for two concept sets (487 sports-related concepts of the YouTube Sports-1M dataset, and 345 TRECVID SIN concepts) have been generated for video keyframes, sampled at a rate of 2 keyframes per second.
- Related publications [trecvid16] [mmm17]
- Dataset [download dataset]

The CERTH-ITI-VAQ700 dataset. We provide a comprehensive video dataset for the problem of aesthetic quality assessment of user-generated video. The dataset includes i) 700 videos in .mp4 format, ii) the video features suitable for aesthetic quality assessment that we extracted, and iii) ground-truth aesthetics annotations (both per individual annotator and as consensus results), generated by 5 annotators per video.
- Related publication [icip16]
- Dataset [download dataset]

The 2015 and 2014 Synchronization of Multi-User Event Media datasets. The datasets, ground-truth time-synchronization results and corresponding evaluation script that were created and used in the 2015 and 2014 editions of the Synchronization of Multi-User Event Media (SEM) task of the MediaEval benchmarking activity are available for download. The datasets comprise multiple galleries of images, videos and audio recordings per event, for several real-life events attended by multiple individuals.
- Related publications [mediaeval14] [mediaeval15]
- SEM2014 dataset [download dataset]
- SEM2015 dataset [download dataset]

The 2014 Social Event Detection dataset. The dataset, challenge definitions, ground-truth challenge results and corresponding evaluation script that were created and used in the 2014 edition of the Social Event Detection (SED) task of the MediaEval benchmarking activity are available for download. The SED2014 dataset has two parts, the first containing 362,578 images belonging to 17,834 events, and the second containing 110,541 images.
- Related publication [mediaeval14]
- SED2014 dataset [download dataset]

The CERTH Image Blur Dataset. The dataset consists of 2450 digital images (1219 undistorted, 631 naturally-blurred and 600 artificially-blurred images), divided into a training set and an evaluation set. This dataset is used for evaluating image quality assessment methods (specifically, methods that detect blurring in images). An important feature of the dataset is that it contains naturally-blurred images as well, rather than only artificially-blurred ones.
- Related publication [icip14]
- Dataset [download dataset]

The 2012 Social Event Detection dataset. The dataset (167,332 images), challenge definitions, ground-truth challenge results and evaluation script that were created and used in the 2012 edition of the Social Event Detection (SED) task of the MediaEval international benchmarking activity are available for download. The SED task requires participants to discover social events and detect related media items in a collection of images accompanied by metadata typically found on the social web (including timestamps, tags and, for a small subset of the images, geotags). In this task, finding the events means finding a set of photo clusters, each comprising only photos associated with a single event (thus, each cluster defines a retrieved event). The dataset and the related materials are publicly available for non-commercial use.
- Related publication [mediaeval12]
- SED2012 dataset [download dataset]

|

|

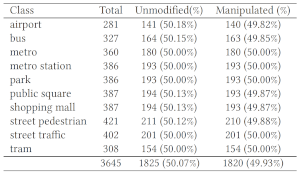

SCEF image dataset for spatial context evaluation. A dataset used in the evaluation of object-level spatial context techniques is available for download. It comprises 922 outdoor images of various semantic categories, annotated at the region level with 10 different concepts. The materials available for download include a) the images, b) the image segmentation masks, c) region-level manual image annotations, d) a set of extracted low-level features, e) a set of computed fuzzy directional spatial relations, and f) a set of region classification results based solely on visual information. The dataset is publicly available for non-commercial use.
- Related publications [wiamis09] [cviu11]
- Image dataset [download dataset]