Vasileios Mezaris

Electrical and Computer Engineer, Ph.D.

ONLINE VIDEOS & DEMOS

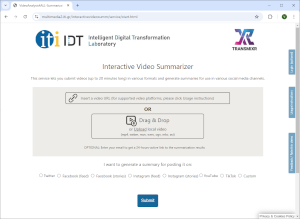

Interactive video summarizer. This web demo lets you upload videos in various formats and generate summaries tailored to different social media channels. It combines our in-house deep learning techniques for automated video summarization and aspect ratio transformation with an interactive user interface that gives the user editorial control over the final video summary. Try the demo with videos of your choice.

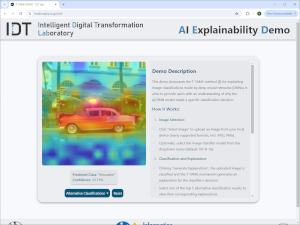

AI explainability demo. This web demo showcases the T-TAME method for explaining DNN-based image classifiers. You can upload an image from your local device, select the image classifier from a dropdown menu (supported models: ViT-B-16, ResNet50, VGG16), and then view the classification decisions and the corresponding explanations (heatmaps) for the top-5 predicted classes. Try the demo with your own images.

On-line video summarization. A web service that lets you submit videos in various formats and uses a variant of our state-of-the-art summarization methods based on Generative Adversarial Networks (GANs) to automatically generate video summaries customized for use in various social media channels. Watch a 2-minute tutorial video for this service, and make the most of your content by generating your own video summaries.

On-line video smart-cropping. A web service similar to the summarization one, but one that only adapts the aspect ratio of the input video, leaving its duration unchanged. The input video can have any aspect ratio, and several popular target aspect ratios are supported. Try the service with your own videos.
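
To illustrate the aspect-ratio arithmetic behind cropping, here is a minimal sketch, assuming a fixed, centered crop window. The actual service decides where to place the window using its own learned techniques, which this sketch does not reproduce; the function name `crop_window` is hypothetical.

```python
from fractions import Fraction


def crop_window(width: int, height: int, target_w: int, target_h: int):
    """Return (x, y, w, h) of the largest centered window with aspect ratio
    target_w:target_h that fits inside a width x height frame.

    Hypothetical helper for illustration only: it always centers the window,
    whereas a smart-cropping service would move it to follow salient content.
    """
    target = Fraction(target_w, target_h)
    if Fraction(width, height) > target:
        # Frame is wider than the target: keep the full height, trim the sides.
        h = height
        w = int(height * target)
    else:
        # Frame is taller than (or equal to) the target: keep the full width,
        # trim the top and bottom.
        w = width
        h = int(width / target)
    # Center the window inside the frame.
    x = (width - w) // 2
    y = (height - h) // 2
    return x, y, w, h
```

For example, `crop_window(1920, 1080, 1, 1)` returns `(420, 0, 1080, 1080)`: a square window centered in a Full HD frame. Because only the spatial window changes, every frame is kept and the video's duration is unaffected.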

On-line video fragmentation and reverse image search. This service allows the user to extract a set of representative keyframes from a video and to use these keyframes to perform reverse image search via Google Image Search, in order to find out whether the video has appeared on the Web before. To submit a video for analysis, the user can either provide its URL (many online video sources are supported) or upload a local copy from their PC. Try the service yourself.

On-line video analysis and annotation services. We have developed several on-line services for the analysis and annotation of audio-visual material.

Our latest interactive web service (v5.0; released in February 2020) lets you upload videos in various formats and performs shot and scene segmentation, as well as visual concept detection using the YouTube-8M concepts. The processing is fast: several times faster than real time. The results are displayed through an interactive user interface that offers fragment-level navigation, playback and search functionalities. Watch a tutorial video for the service (another video, featuring an older version of the UI, is also available), and try v5.0 of the service, or the previous v4.0 (which uses a different concept set), with your own videos.

Another version of this service is designed for machine-to-machine (rather than machine-to-human) interaction: it returns the results as a machine-processable, MPEG-7-compliant XML file. You can also test this service with your own videos (please contact us first). An informational video explaining how this service works is available. Watch the video.

Finally, as part of the LinkedTV research project and in collaboration with other LinkedTV partners, we have developed a more complete version of the on-line service that, given an input video, performs visual analysis (shot segmentation, chapter/topic segmentation, concept detection, face detection & tracking), audio analysis (speech & speaker recognition) and text analysis (keyword extraction). An informational video explaining how this service works is also available. Watch the video.

Multi-user photo collection synchronization and summarization. This video shows our method for synchronizing photo galleries of the same event (e.g. a sports event or a festival) captured by different people, and for summarizing the event using all of these galleries. Watch the video.

Personal image analysis and organization. As part of the ForgetIT project, we have built interactive demos of image quality assessment, visual concept detection, clustering and image collection summarization (these work with all mainstream browsers, although a recent browser version may be required): http://multimedia2.iti.gr/ForgetIT/images_varioustravels/demonstrator.html and http://multimedia2.iti.gr/ForgetIT/images_Costarica/demonstrator.html

Object re-detection in video. We have developed a method for the fast re-detection and localization of objects in video, using local visual features. Watch the video.

Video segmentation into shots. We have developed methods for the fast temporal segmentation of video into shots, which detect both abrupt and gradual shot transitions and effectively handle effects such as photographers' flashes. Watch the video.
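
The basic idea of abrupt-transition (cut) detection can be sketched as follows. This is a generic textbook baseline that compares frame-to-frame histogram differences against a threshold; it is not the method developed in this work, which additionally handles gradual transitions and flashes. The function names, the 8-bin histograms and the 0.5 threshold are illustrative assumptions, and frames are represented as flat lists of grayscale intensities (0 to 255) to keep the example dependency-free.

```python
from typing import List, Sequence


def gray_histogram(frame: Sequence[int], bins: int = 8) -> List[float]:
    """Normalized grayscale histogram of a frame given as pixel intensities 0..255."""
    counts = [0] * bins
    for p in frame:
        counts[p * bins // 256] += 1
    total = len(frame)
    return [c / total for c in counts]


def histogram_distance(h1: Sequence[float], h2: Sequence[float]) -> float:
    """Half the L1 distance between two normalized histograms (range 0..1)."""
    return 0.5 * sum(abs(a - b) for a, b in zip(h1, h2))


def detect_cuts(frames: Sequence[Sequence[int]], threshold: float = 0.5) -> List[int]:
    """Return indices i such that a cut is declared between frame i-1 and frame i.

    Hypothetical baseline: a cut is declared whenever consecutive histograms
    differ by more than `threshold`. It does not handle gradual transitions
    or flashes.
    """
    hists = [gray_histogram(f) for f in frames]
    return [
        i
        for i in range(1, len(hists))
        if histogram_distance(hists[i - 1], hists[i]) > threshold
    ]
```

With `dark = [20] * 100` and `bright = [230] * 100`, `detect_cuts([dark, dark, dark, bright, bright])` returns `[3]`. A single bright frame, as in `detect_cuts([dark, dark, bright, dark])`, yields two spurious cuts (`[2, 3]`), which is exactly the kind of flash effect that a practical shot segmentation method has to suppress.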

Lecture video analysis. As part of the MediaMixer project, we have built an interactive online demo of shot segmentation and visual concept detection for lecture videos. You can access the demo at http://multimedia2.iti.gr/mediamixer/demonstrator.html (best viewed with Firefox).

Personalization. As part of the LinkedTV project, we have built an interactive online demo of content and concept filtering for multimedia by means of semantic reasoning. Watch the video.

GPU-accelerated LIBSVM. We have developed an open-source package for GPU-assisted Support Vector Machine (SVM) training, based on the LIBSVM package. Watch the video.